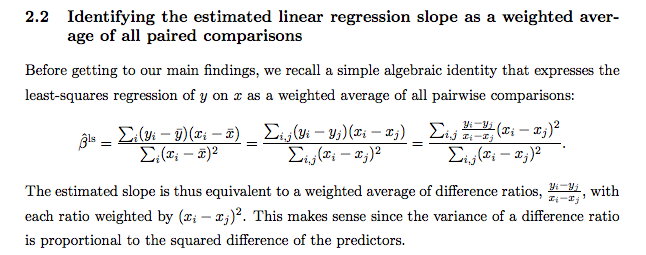

Wow, this is pretty cool:

From an Andrew Gelman article on summaring a linear regression as a simple difference between upper and lower categories. I get the impression there are lots of weird misunderstood corners of linear models… (e.g. that “least squares regression” is a maximum likelihood estimator for a linear model with normal noise… I know so many people who didn’t learn that from their stats whatever course, and therefore find it mystifying why squared error should be used… see this other post from Gelman.)