I’m a bit late blogging this, but here’s a messy, exciting — and statistically validated! — new online data source.

My friend Roddy at Facebook wrote a post describing their sentiment analysis system, which can evaluate positive or negative sentiment toward a particular topic by looking at a large number of wall messages. (I’d link to it, but I can’t find the URL anymore. Here’s the Lexicon, but that version only shows term frequencies, not sentiment.)

How they constructed their sentiment detector is interesting. Starting with a list of positive and negative terms, they had a lexical acquisition step to gather many more candidate synonyms and misspellings (a necessity in this social media domain, where WordNet ain’t gonna come close!). After manually filtering these candidates, they assessed the sentiment toward a mention of a topic by looking for instances of these positive and negative words nearby, along with “negation heuristics” and a few other features.
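To make that description concrete, here’s a toy Python sketch of a lexicon-plus-window detector of this kind. It is not Facebook’s code: the word lists, the five-token window, and the “negator in the two preceding tokens” rule are all my own hypothetical stand-ins for the real lexicon and negation heuristics, which the post doesn’t spell out.

```python
import re

# Hypothetical toy lexicons: the real lists (seed terms plus acquired
# synonyms and misspellings, manually filtered) aren't given in the post.
POSITIVE = {"good", "great", "love", "awesome", "gud", "luv"}
NEGATIVE = {"bad", "hate", "awful", "terrible", "sux"}
NEGATORS = {"not", "no", "never", "dont", "don't", "isnt", "isn't"}

TOKEN_RE = re.compile(r"[a-z']+")


def tokenize(text: str) -> list[str]:
    """Extremely simple tokenizer: lowercase, keep runs of letters/apostrophes."""
    return TOKEN_RE.findall(text.lower())


def sentiment_toward(text: str, topic: str, window: int = 5) -> int:
    """Return +1, -1, or 0 for the sentiment expressed near `topic`.

    Sums lexicon hits within `window` tokens of each mention of `topic`.
    A hit's polarity is flipped when a negator appears in the two tokens
    before it (a hypothetical stand-in for the post's negation heuristics).
    Returns 0 when there are no hits or they cancel out.
    """
    tokens = tokenize(text)
    topic = topic.lower()
    score = 0
    for i, tok in enumerate(tokens):
        if tok != topic:
            continue
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            # +1 for a positive word, -1 for a negative word, 0 otherwise
            polarity = int(tokens[j] in POSITIVE) - int(tokens[j] in NEGATIVE)
            if polarity and any(t in NEGATORS for t in tokens[max(0, j - 2):j]):
                polarity = -polarity
            score += polarity
    return (score > 0) - (score < 0)
```

For example, `sentiment_toward("I don't love mccain", "mccain")` returns -1. Posts with no lexicon hit near the topic come back as 0 and are effectively ignored, which is one way a detector like this ends up low-recall.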

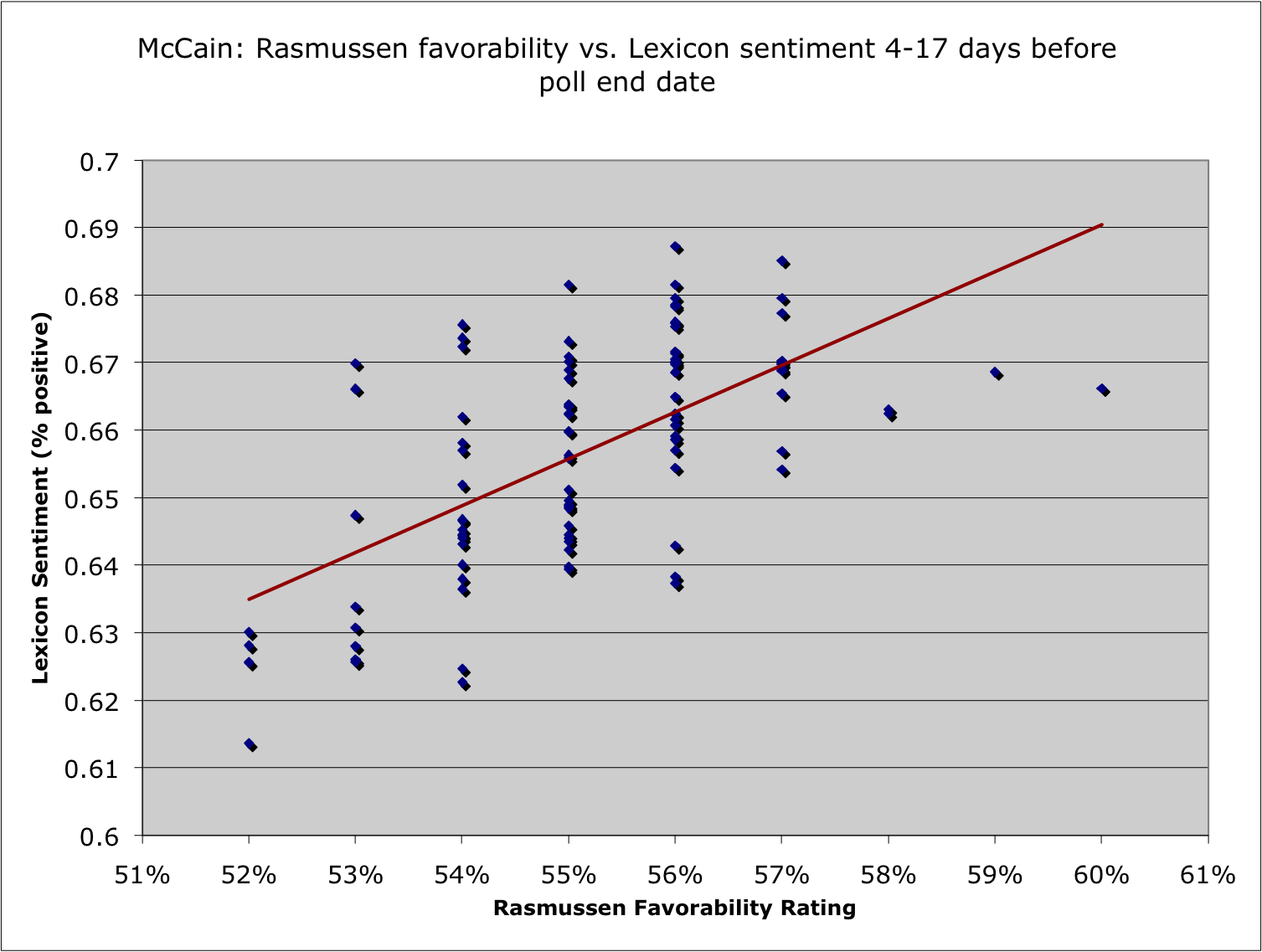

He describes the system as high-precision and low-recall. But this is still useful: he did an evaluation against election opinion polls, and found that the system’s sentiment scores could predict moves in the polls! And it gives more up-to-date information than waiting for pollsters to finish and report their results.

With a few more details to ensure the analysis is rigorous, I think this is a good way to validate whether an NLP or other data mining system is yielding real results: try to correlate its outputs with another, external data source that’s measuring something similar. Kind of like semi-supervised learning: the entire NLP system is like the “unsupervised” component, producing outputs that can be calibrated to match a target response like presidential polls, search relevance judgments, or whatever. You can validate SVD in this way too.
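Here’s a minimal sketch of that kind of check, assuming you have two equal-length daily series; the function names and toy numbers are hypothetical. Since the claim is about predicting moves, it correlates day-over-day changes rather than raw levels: two series that both drift upward over an election season can look highly correlated in levels even when neither predicts the other.

```python
from math import sqrt


def pearson(x: list[float], y: list[float]) -> float:
    """Plain Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def changes(series: list[float]) -> list[float]:
    """Day-over-day differences, i.e. the 'moves' in a series."""
    return [b - a for a, b in zip(series, series[1:])]


def lagged_correlation(signal: list[float], target: list[float], lag: int) -> float:
    """Correlate signal[t] with target[t + lag] for equal-length daily series.

    With lag > 0 this asks whether the signal leads the target.
    """
    if len(signal) != len(target):
        raise ValueError("series must have the same length")
    if not 0 <= lag < len(signal) - 1:
        raise ValueError("lag leaves fewer than two overlapping points")
    n = len(signal) - lag
    return pearson(signal[:n], target[lag:])


# Hypothetical toy data: the "polls" repeat the sentiment series one day later.
sentiment = [0.1, 0.3, 0.2, 0.5, 0.4, 0.6]
polls = [0.0, 0.1, 0.3, 0.2, 0.5, 0.4]
print(lagged_correlation(changes(sentiment), changes(polls), lag=1))
```

On this toy data the lag-1 correlation of changes is 1.0, while the same-day (lag 0) correlation is negative. A real analysis would also want a significance test and an out-of-sample period.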

I wanted to comment on a few points in the post:

> We got > 80% precision with some extremely simple tokenization schemes, negation heuristics, and feature selection (throwing out words which were giving us a lot of false positives). Sure, our recall sucked, but who cares…we have tons of data! Want greater accuracy? Just suck in more posts!

Let’s be a little careful about what “greater accuracy” means here. I agree that high-precision, low-recall classifiers can definitely be useful in a setting where you have a huge volume of data, since you can still make statistically significant comparisons between, say, “obama” vs. “mccain” detected sentiment and see changes over time. However, there can be a bias depending on what sort of recall errors get made. If your detector systematically misses positive statements about “obama” more often than about “mccain” (say, because Obama supporters use a more social-media-ish dialect of English that was harder to extract new lexical terms for), then your results are biased. Precision errors are easy to see (just look at what the detector flags), but I think recall errors can be difficult to assess without making a big hand-labeled corpus of wall posts.
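A bit of back-of-the-envelope arithmetic shows why sucking in more posts doesn’t fix this kind of bias. The sketch assumes perfect precision and made-up recall rates: both candidates are truly 50% positive, but the detector catches only 10% of positive “obama” posts versus 20% of everything else.

```python
from math import sqrt


def observed_positive_share(true_pos_share: float, recall_pos: float, recall_neg: float) -> float:
    """Fraction of detected posts that are positive, assuming perfect precision.

    Missed posts never enter the estimate, so unequal recall for positive
    vs. negative posts shifts the observed share away from the true one.
    """
    detected_pos = true_pos_share * recall_pos
    detected_neg = (1 - true_pos_share) * recall_neg
    return detected_pos / (detected_pos + detected_neg)


def standard_error(share: float, n_detected: int) -> float:
    """Binomial standard error of an estimated share from n detected posts."""
    return sqrt(share * (1 - share) / n_detected)


# Hypothetical recall numbers: both candidates are truly 50% positive, but the
# detector misses positive "obama" posts twice as often.
mccain = observed_positive_share(0.5, recall_pos=0.2, recall_neg=0.2)  # 0.5
obama = observed_positive_share(0.5, recall_pos=0.1, recall_neg=0.2)   # ~0.333
for n in (1_000, 1_000_000):
    # The gap stays put while the standard error shrinks with more data.
    print(n, round(obama - mccain, 3), round(standard_error(obama, n), 4))
```

The roughly 17-point gap between the observed shares doesn’t depend on how many posts you collect, while the standard error shrinks like 1/sqrt(n). With enough data you just get a very confident wrong answer.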

(Hearst patterns for hypernymy detection are another example of high-precision, low-recall classifiers; take a look at page 4 of Snow et al. 2005. I wonder if hand-crafted pattern approaches are always high-precision, low-recall. If so, there should be more work on how to use them practically, thinking of them as noisy samplers, since they’ll always be a basic approach to solving simple information extraction problems.)
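For a feel of how simple these patterns are, here’s a toy version of one classic Hearst pattern (“X such as Y, Z and W”). It assumes each noun phrase is a single word, whereas real implementations match over chunked or parsed noun phrases, so treat it as a sketch only.

```python
import re

# One Hearst pattern: "<hypernym> such as <hyponym>, <hyponym> (and|or) <hyponym>".
# Simplifying assumption: every noun phrase is a single word.
SUCH_AS = re.compile(r"\b(\w+) such as ((?:\w+, )*\w+(?: (?:and|or) \w+)?)")


def hearst_such_as(sentence: str) -> list[tuple[str, str]]:
    """Return (hyponym, hypernym) pairs matched by the 'such as' pattern."""
    pairs = []
    for match in SUCH_AS.finditer(sentence.lower()):
        hypernym, hyponym_list = match.group(1), match.group(2)
        # Split "paris, london and berlin" into individual hyponyms.
        for hyponym in re.split(r", | and | or ", hyponym_list):
            pairs.append((hyponym, hypernym))
    return pairs


print(hearst_such_as("He visited cities such as Paris, London and Berlin."))
```

When it fires, it’s usually right (here it yields paris, london and berlin as kinds of cities). But `hearst_such_as("Cities like Paris are crowded.")` returns an empty list even though the sentence expresses the same relation, which is the low-recall side.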

And,

> Had we done things “the right way” and set about extracting the sentiment terms from a labeled corpus, we would have needed a ton more hand-labeled data. That costs time and money; far better to bootstrap and do things quick, dirty and wrong.

When was this ever “the right way”? It’s certainly a typical way for researchers to approach these problems, since they usually rely on someone like the LDC to release labeled corpora. (Which lets the LDC effectively control their research agendas, but that’s a rant for another post. Then again, at least everyone can compare their work to each other’s.) Also, note that hand-labeled data is way cheaper and easier to obtain than it used to be, thanks to crowdsourcing.

In any case, final results are what matter, and there’s some evidence this two-step technique can get them. The full post: Language Wrong – Predicting polls with Lexicon.

Brendan, thanks for your insights! Your point about recall errors is well taken. The feature and model selection was done on a labeled corpus of 5,000 wall posts about 6 different topics. I haven’t looked at the relative performance for each of those topics, but it seems obvious now that I need to do that.

The problem is that if there is some classifier bias in different domains, we would need to train the model on hand-labeled data for each domain. If the number of topics we are interested in is small, this could be solved with crowdsourced labeling, as you suggest. But how would we scale to hundreds of thousands of topics (e.g., all words and bigrams, or all entities in Freebase)? That’s an interesting problem that warrants some thought.

Thanks for the post. I stumbled across this while trying to implement some rudimentary sentiment analysis on Facebook status updates. I’m a programmer by training, but I’ve never done any ML. Great writeup; I especially like the part about validating the algorithm against an external, supposedly correlated event.
