I was just looking at some papers from the SANCL-2012 workshop on web parsing from June this year, which are very interesting to those of us who wish we had good parsers for non-newspaper text. The shared task focus was on domain adaptation from a setting of lots of Wall Street Journal annotated data and very little in-domain training data. (Previous discussion here; see Ryan McDonald’s detailed comment.) Here are some graphs of the results (last page in the Petrov & McDonald overview).

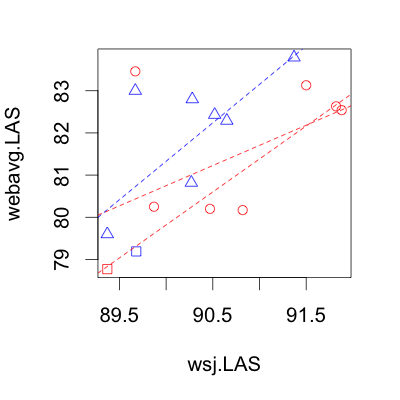

I was most interested in whether parsing accuracy on the WSJ correlates to accuracy on web text. Fortunately, it does. They evaluated all systems on four evaluation sets: (1) Text from a question/answer site, (2) newsgroups, (3) reviews, and (4) Wall Street Journal PTB. Here is a graph across system entries, with the x-axis being the labeled dependency parsing accuracy on WSJPTB, and the y-axis the average accuracy on the three web evaluation sets. Note the axis scales are different: web accuracies are much worse than WSJ.

There are two types of systems here: direct dependency parsers, and constituent parsers whose output is converted to dependencies and then evaluated. There’s an interesting argument in the overview paper that the latter type seems to perform better out-of-domain, perhaps because they learn more about latent characteristics of English grammatical structure that transfer between domains. To check this, I grouped on this factor: blue triangles are constituents-then-convert parsers, and the red circles are direct dependency parsers. (Boxes are the two non-domain-adapted baselines.) The relationship between WSJ and web accuracy can be checked with linear regression on the subgroups. The constituent parsers are higher up on the graph; so indeed, the constituent parsers have higher web accuracy relative to their WSJ accuracy compared to the direct dependency parsers. A difference in the slopes might also be interesting, but the difference between them is mostly driven by a single direct-dependency outlier (top left, “DCU-Paris13”); excluding that, the slopes are quite similar. This example probably shouldn’t count as a direct-dependency system anyway, because it’s actually an ensemble including constituency conversion as a component (if it is “DCU-Paris13-Dep” here). In any case, lines with and without it are both shown.

Since the slopes are similar, we shouldn’t need varying-slopes hierarchical regression to analyze the differences, so we can just throw everything into one regression (webacc ~ wsjacc + ConstitIndicator). The result: constituent parsers get an absolute 1.6% better out-of-domain accuracy compared to dependency parsers with the same WSJ parsing accuracy. (This is excluding DCU-Paris13.) It’s not clear if this is due to better grammar learning, or if it’s due to an issue with SD (Stanford Dependencies) giving bad conversions on web text.
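For anyone who wants to try this kind of fit themselves, here is a rough sketch of a common-slope regression with a group indicator, in plain Python. The function names and the data are made up for illustration: the numbers are synthetic, built with a known offset of 2.0, and are not the shared task results. The WSJ accuracies are centered before solving the normal equations, purely for numerical stability.

```python
def solve_linear_system(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def fit_offset_model(wsj_acc, web_acc, is_constituent):
    """Least-squares fit of web_acc ~ intercept + slope*wsj_acc + offset*indicator.

    Returns (intercept, slope, offset). The offset is the estimated gap in
    web accuracy between the two parser types at equal WSJ accuracy.
    """
    mean_wsj = sum(wsj_acc) / len(wsj_acc)
    # Design matrix rows: [1, centered WSJ accuracy, constituent indicator]
    rows = [[1.0, w - mean_wsj, float(c)]
            for w, c in zip(wsj_acc, is_constituent)]
    k = 3
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * y for r, y in zip(rows, web_acc)) for i in range(k)]
    b0, slope, offset = solve_linear_system(xtx, xty)
    # Undo the centering to recover the intercept on the original scale.
    return b0 - slope * mean_wsj, slope, offset


# Synthetic, noise-free data (NOT the SANCL numbers).
wsj = [87.0, 88.5, 89.5, 90.5, 88.0, 89.0, 90.0, 91.0]
constit = [0, 0, 0, 0, 1, 1, 1, 1]
web = [10.0 + 0.8 * w + 2.0 * c for w, c in zip(wsj, constit)]

intercept, slope, offset = fit_offset_model(wsj, web, constit)
print(f"slope={slope:.3f} offset={offset:.3f}")
```

With real, noisy data you would also want standard errors on the offset, which a stats package will give you directly.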

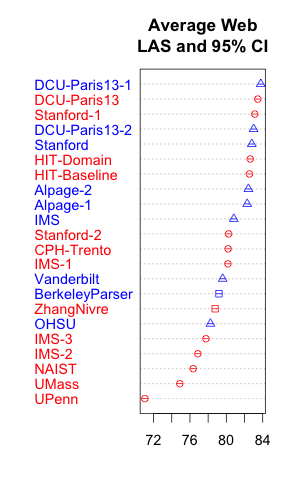

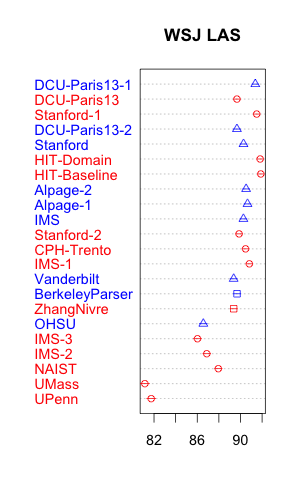

To see the individual systems’ names, here are both sets of numbers. These include all systems; for the scatterplot above I excluded the ones that performed worse than the non-domain-adapted baselines. (Looking at those papers, they seemed to be less elaborately engineering-heavy efforts; for example, the very interesting Pitler paper focuses on issues in how the representation handles conjunctions.)

Confidence intervals (CIs) can’t be seen on those skinny graphs, but they’re there. They’re computed via binomial normal approximations using the number of tokens, i.e. assuming correctness is independent at the token level. They should probably use sentence-level or doc-level grouping, of course, so the intervals are tighter than they should be.
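To make the interval computation concrete, here is a small sketch of the binomial normal approximation. The function name, the accuracy of 0.80, and the token count of 10,000 are hypothetical values for illustration, not figures from the shared task.

```python
import math


def accuracy_ci(accuracy, n_tokens, z=1.96):
    """Normal-approximation interval for an accuracy measured over n_tokens.

    Treats each token as an independent Bernoulli trial, which ignores
    sentence-level and document-level clustering, so the interval is
    narrower than it should be.
    """
    se = math.sqrt(accuracy * (1.0 - accuracy) / n_tokens)
    return accuracy - z * se, accuracy + z * se


# Hypothetical example: 80% accuracy over 10,000 tokens.
low, high = accuracy_ci(0.80, 10000)
print(f"95% CI: [{low:.4f}, {high:.4f}]")
```

With this many tokens the interval is under a point wide on either side, which is why the bars are hard to see on the plots.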

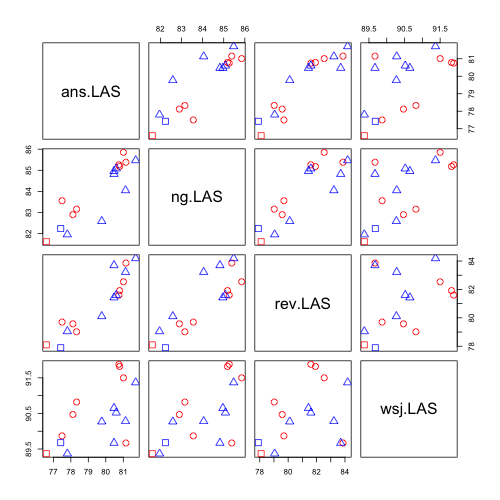

Finally, this is less useful, but here’s the scatterplot matrix of all four evaluation sets (excluding worse-than-baseline systems again).

“I was most interested in whether parsing accuracy on the WSJ correlates to accuracy on web text. Fortunately, it does.” I don’t know if the correlation is significant or not, but note that even if it is, that does not mean we can just go ahead and optimize our parsers on WSJ. When we look at comparable systems, e.g., HIT-Baseline and HIT-System, we typically see that the in-domain performance of the adapted system drops a little. So while there may be a correlation between how well good and bad parsers perform across domains, there is typically no correlation between in-domain and out-of-domain performance when we fine-tune parameters within a system.