Update (3/14/2010): There is now a TweetMotif paper.

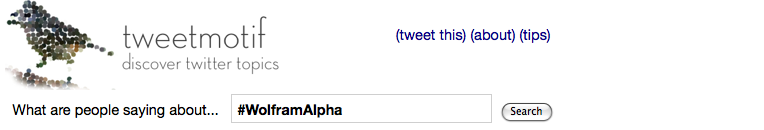

Last week, my awesome friends David Ahn and Mike Krieger and I finished hacking together TweetMotif, an experimental prototype for exploratory search on Twitter. If you want to know what people are thinking about something, the normal search interface, search.twitter.com, gives really cool information, but it’s hard to wade through hundreds or thousands of results. We take the tweets matching a query and group similar messages together, showing significant terms and phrases that co-occur with the user’s query. Try it out at tweetmotif.com. Here’s an example for a current hot topic, #WolframAlpha:

It’s currently showing tweets that match #WolframAlpha together with two interesting bigrams, “queries failed” and “google killer”. TweetMotif doesn’t attempt to derive the meaning of, or sentiment toward, these phrases (NLP is hard, and doing this much is hard enough!), but it’s easy for you to look at the tweets themselves and figure out what’s going on.

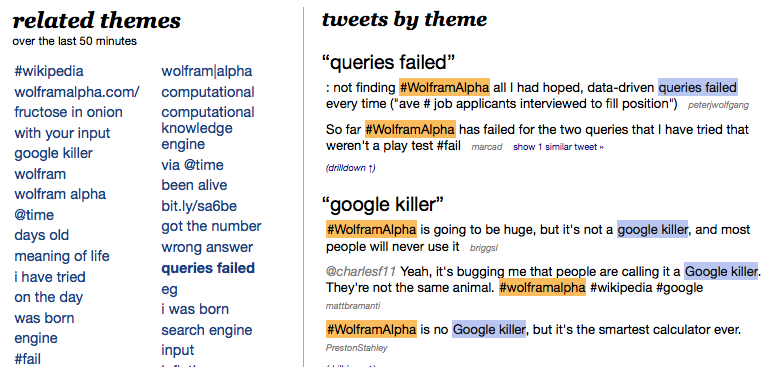

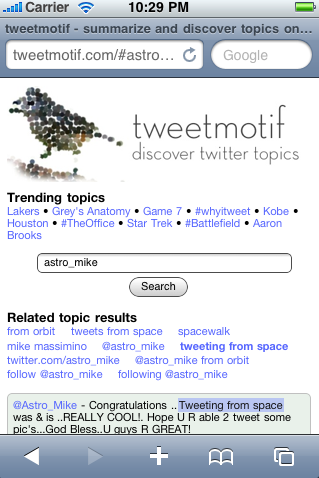

Here’s another fun example right now, a query for Dollhouse:

I love that the #wolframalpha topic has “infected” the Dollhouse space. Someone pointed out a connection between them, but really they’re connected through bot spam. TweetMotif’s duplicate detection algorithm found 22 messages here, each of which is basically a list of all the trending topics. This seems to be a popular tactic for Twitter spambots.
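The post doesn’t describe how TweetMotif’s duplicate detection actually works. As a rough illustration of the general idea only, here is a minimal sketch that greedily groups tweets whose word sets overlap heavily. Treating a tweet as an unordered set of words is an assumption, chosen because spam that lists trending topics often shuffles their order; the function names and the 0.5 threshold are hypothetical.

```python
def word_set(text):
    """Lowercased set of whitespace-separated tokens; word order is ignored."""
    return set(text.lower().split())


def jaccard(a, b):
    """Size of the intersection over size of the union (1.0 for two empty sets)."""
    return len(a & b) / len(a | b) if (a or b) else 1.0


def group_near_duplicates(tweets, threshold=0.5):
    """Greedily add each tweet to the first group whose representative
    (that group's first tweet) is at least `threshold` similar to it."""
    groups = []  # list of (representative word set, member tweets)
    for tweet in tweets:
        words = word_set(tweet)
        for rep, members in groups:
            if jaccard(words, rep) >= threshold:
                members.append(tweet)
                break
        else:  # no group was similar enough: start a new one
            groups.append((words, [tweet]))
    return [members for _, members in groups]


spam = [
    "#wolframalpha dollhouse #fail swine flu",
    "dollhouse #wolframalpha swine flu #fail",
    "watching dollhouse tonight",
]
print(group_near_duplicates(spam))
```

The two reordered trending-topic lists land in one group, and the ordinary tweet stays on its own. Comparing against only the first member of each group keeps the sketch linear in the number of groups, though a real system would likely need something more scalable (for example, hashing) for large result sets.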

I learned a ton making this system, and I’ll try to write more about the technical details in a future post. It’s interesting to hear people speculate on how it works; everyone gives a different answer. I guess this goes to show you that search/NLP is still a pretty unsettled, not-completely-understood area.

There are lots of interesting TweetMotif examples. More prosaic, less news-y queries like sandwich yield cool things like major ingredients of sandwiches and types of sandwiches. (These are basically distributional similarity candidates for synonym and meronym acquisition, though a bit too noisy to use in its current form.) And in a few cases, like for understanding currently unfolding events, TweetMotif might even be useful! It would be nice to expand the set of usefully served queries. We’re occasionally posting interesting queries at twitter.com/tweetmotif.

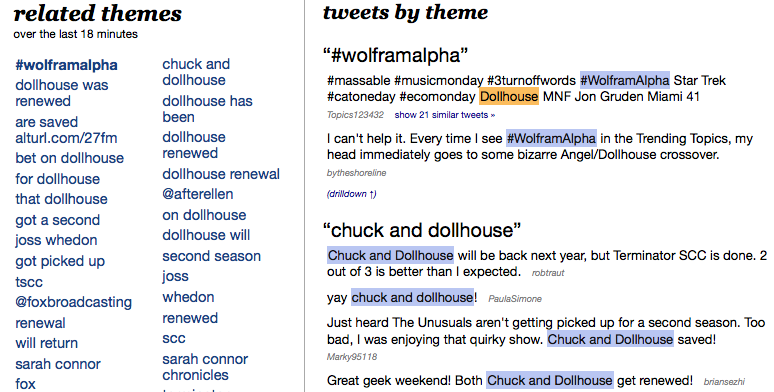

And oh yeah. We have a beautiful iPhone interface!

Check it out folks. This is a functional prototype, so you can play with it right now at tweetmotif.com.

There are lots of ways to build this app. In fact, we were just talking to a customer about building something like query refinement by phrase suggestion, which is very similar technically. My fave implementation of this idea is scirus.com (by FAST and Elsevier), which gives you suggestions under “refine your search”.

First, do significant phrase extraction. You can either do this per query result set (very expensive, but much better results if you have enough results) or statically (perhaps with updates). Best is to do it versus some background corpus (e.g. Wikipedia), but plain old collocation extraction is OK. Then index the phrases along with the tweets.
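For the “plain old collocation extraction” option (no background corpus), one common choice is pointwise mutual information over adjacent word pairs. The comment doesn’t name a specific measure, so PMI here is an assumption, as are the function name `pmi_bigrams` and the `min_count` cutoff; this is a minimal sketch, not the method either TweetMotif or the commenter used.

```python
import math
from collections import Counter


def pmi_bigrams(docs, min_count=2):
    """Rank adjacent word pairs by pointwise mutual information (PMI).

    PMI(a, b) = log2( P(a b) / (P(a) * P(b)) ), which is high when the pair
    occurs together far more often than chance would predict.
    """
    unigrams, bigrams = Counter(), Counter()
    for doc in docs:
        toks = doc.lower().split()
        unigrams.update(toks)
        bigrams.update(zip(toks, toks[1:]))
    n_uni = sum(unigrams.values())
    n_bi = sum(bigrams.values())
    scores = {}
    for (a, b), count in bigrams.items():
        if count < min_count:  # PMI is unreliable for very rare pairs
            continue
        p_ab = count / n_bi
        p_a, p_b = unigrams[a] / n_uni, unigrams[b] / n_uni
        scores[(a, b)] = math.log2(p_ab / (p_a * p_b))
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


tweets = [
    "google killer is here",
    "is it a google killer",
    "the queries failed again",
    "queries failed on the site",
]
print(pmi_bigrams(tweets))
```

Only “google killer” and “queries failed” survive the count cutoff here. Run once over a static collection, the resulting phrase list could then be indexed alongside the tweets, as the comment suggests.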

At run time, when you search, you pull back the sig phrases in the tweets as well as the results, so you get a set of tweets and a tweet-phrase relation. You could either do some kind of fancy dynamic-programming optimization to get an optimal partition by phrase according to some metric (diversity/coherence — see below), or you could just report the top few results and accept some redundancy (realizing that a phrase appearing in all or most hits isn’t useful in organizing results).
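The simpler of the two run-time options (report the top few phrases and accept some redundancy) can be sketched as follows. The names `top_phrases` and `phrases_of`, and the rule that a phrase covering more than 80% of the hits is skipped, are hypothetical choices made to illustrate “a phrase appearing in all or most hits isn’t useful”.

```python
from collections import defaultdict


def top_phrases(hits, phrases_of, k=3, max_coverage=0.8):
    """Build the tweet-phrase relation for one result set and return the top-k phrases.

    hits:        ids of the tweets returned by the search
    phrases_of:  maps a tweet id to the set of significant phrases indexed with it
    Phrases found in more than `max_coverage` of the hits are skipped, since
    they don't help split the results into groups.
    """
    if not hits:
        return []
    tweets_with = defaultdict(set)  # phrase -> ids of hits containing it
    for tid in hits:
        for phrase in phrases_of.get(tid, ()):
            tweets_with[phrase].add(tid)
    useful = [
        (phrase, tids)
        for phrase, tids in tweets_with.items()
        if len(tids) / len(hits) <= max_coverage
    ]
    # Largest groups first; ties broken alphabetically for a stable order.
    useful.sort(key=lambda item: (-len(item[1]), item[0]))
    return useful[:k]


hits = [1, 2, 3, 4, 5]
phrases_of = {
    1: {"#wolframalpha", "google killer"},
    2: {"#wolframalpha", "google killer"},
    3: {"#wolframalpha", "queries failed"},
    4: {"#wolframalpha", "queries failed"},
    5: {"#wolframalpha", "queries failed"},
}
for phrase, tids in top_phrases(hits, phrases_of):
    print(phrase, sorted(tids))
```

The query term itself (“#wolframalpha”) appears in every hit and is dropped, leaving “queries failed” and “google killer” as groups. Nothing here prevents two reported phrases from covering nearly the same tweets; that is the redundancy the comment says you would have to accept.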

The real trick for this application is optimizing for high diversity (low similarity among the clusters) and high coherence (high similarity of tweets within a cluster). Right now, TweetMotif’s not doing a very good job of this (clusty.com also has issues; it’s a tough problem).
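To make “diversity” and “coherence” concrete, here is one possible way to score them, assuming tweets are compared as sets of words with Jaccard similarity. The comment doesn’t fix a similarity measure, so both the measure and the function names are illustrative assumptions.

```python
from itertools import combinations


def jaccard(a, b):
    """Size of the intersection over size of the union (1.0 for two empty sets)."""
    return len(a & b) / len(a | b) if (a or b) else 1.0


def coherence(cluster):
    """Mean pairwise Jaccard similarity of the tweets (as word sets) in one cluster."""
    sets = [set(t.lower().split()) for t in cluster]
    pairs = list(combinations(sets, 2))
    if not pairs:  # a single tweet is trivially coherent
        return 1.0
    return sum(jaccard(a, b) for a, b in pairs) / len(pairs)


def diversity(clusters):
    """1 minus the mean pairwise Jaccard similarity of the clusters' pooled vocabularies."""
    vocabs = [{w for t in c for w in t.lower().split()} for c in clusters]
    pairs = list(combinations(vocabs, 2))
    if not pairs:
        return 1.0
    return 1.0 - sum(jaccard(a, b) for a, b in pairs) / len(pairs)


flu_clusters = [
    ["h1n1 flu is spreading", "more h1n1 flu cases"],
    ["swine flu is spreading", "swine flu cases up"],
]
print(diversity(flu_clusters))  # 0.5: the two "flu" themes share half their vocabulary
```

A partitioner could then trade the two scores off against each other, e.g. preferring merges that raise diversity without dropping coherence below some floor.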

Another thing you might consider for TweetMotif is restricting results to phrases like noun phrases; getting “by the black” is phrasally wrong for a search on the band “Black Keys”, and plain old “-”, which I got as a term for a search on ‘Raveonettes’, is just wrong. And “flu” pulled back “h1n1 flu”, “swine flu”, and “case of h1n1”, which just isn’t diverse enough. I had better luck with the drilldowns for topics like “banh mi”. You should probably also pay attention to Twitter conventions like “#” for tags.

Thanks for the comments. Significant phrases are extracted against a general Twitter corpus by comparing their likelihoods under the two corpora’s language models.
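A minimal sketch of “comparing likelihoods under the two corpora’s language models”: score each n-gram by the log ratio of its probability in the query’s result set versus a general background corpus. The reply doesn’t say which smoothing or n-gram order was used, so add-alpha smoothing, the `min_count` cutoff, and all names below are assumptions.

```python
import math
from collections import Counter


def ngram_counts(texts, n):
    """Count the n-grams (as tuples of lowercased tokens) in a list of texts."""
    counts = Counter()
    for text in texts:
        toks = text.lower().split()
        counts.update(tuple(toks[i:i + n]) for i in range(len(toks) - n + 1))
    return counts


def significance(fg_texts, bg_texts, n=1, alpha=1.0, min_count=2):
    """Rank n-grams by log P_fg(g) / P_bg(g) under add-alpha smoothed models.

    fg_texts: tweets matching the query (foreground)
    bg_texts: a general sample of tweets (background)
    """
    fg, bg = ngram_counts(fg_texts, n), ngram_counts(bg_texts, n)
    vocab_size = len(set(fg) | set(bg))
    fg_total, bg_total = sum(fg.values()), sum(bg.values())
    scores = {}
    for gram, count in fg.items():
        if count < min_count:  # too rare in the results to trust
            continue
        p_fg = (count + alpha) / (fg_total + alpha * vocab_size)
        p_bg = (bg[gram] + alpha) / (bg_total + alpha * vocab_size)
        scores[" ".join(gram)] = math.log(p_fg / p_bg)
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


results = ["sandwich with turkey", "turkey sandwich", "ham sandwich with cheese"]
background = ["with the day", "the day is good", "with friends today", "i like cheese"]
print(significance(results, background))
```

“sandwich” and “turkey” rank above “with”, because “with” is also common in the background sample. Smoothing matters here: without it, any n-gram missing from the background would get an infinite score.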

There is something of a runtime partitioning scheme, as you mentioned. TweetMotif has a very strong coherence constraint: a theme label MUST appear in every message within that theme. (I think for analytic purposes, it’s nice to minimize hidden variables and be transparent.) We start by taking all the significant phrases as clusters, then treat it as a deduplication problem (kinda like agglomerative clustering) to reduce redundancy. Cluster merges are only allowed to keep the messages in the *intersection* (not the union, as in agglomerative clustering), so they’re only done when a small number of messages would be lost in the merge (e.g. when the cluster-cluster Jaccard similarity is high). Then there’s a decision about what label to use for the new cluster; sometimes we end up with a skip n-gram as the label, which is really neat.
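Here is a minimal sketch of intersection-only merging as described above: start with one cluster per phrase, repeatedly merge the most similar pair while its Jaccard similarity stays above a threshold, and keep only the messages both clusters share, so every label still appears in every member. The 0.7 threshold and the “a | b” labeling rule are hypothetical; the reply doesn’t say how TweetMotif actually picks the new label.

```python
from itertools import combinations


def jaccard(a, b):
    """Size of the intersection over size of the union (1.0 for two empty sets)."""
    return len(a & b) / len(a | b) if (a or b) else 1.0


def merge_clusters(clusters, threshold=0.7):
    """clusters: dict mapping a phrase label to the set of message ids containing it.

    Greedily merge the most similar pair while its Jaccard similarity is at least
    `threshold`. A merged cluster keeps only the INTERSECTION of its members,
    so a high threshold guarantees that few messages are lost per merge.
    """
    clusters = dict(clusters)  # don't mutate the caller's dict
    while len(clusters) > 1:
        (a, b), sim = max(
            (((x, y), jaccard(clusters[x], clusters[y]))
             for x, y in combinations(sorted(clusters), 2)),
            key=lambda item: item[1],
        )
        if sim < threshold:
            break
        merged = clusters.pop(a) & clusters.pop(b)
        clusters[f"{a} | {b}"] = merged  # hypothetical labeling rule
    return clusters


phrase_clusters = {
    "google": {1, 2, 3, 4},
    "killer": {1, 2, 3, 4, 5},
    "queries failed": {6, 7},
}
print(merge_clusters(phrase_clusters))
```

“google” and “killer” overlap on 4 of 5 messages, so they merge and message 5 is dropped; “queries failed” shares nothing with them and stays separate. Recomputing all pairwise similarities on every step is quadratic per merge, which is acceptable for the few dozen phrases in a single result set.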

This is pretty conservative as far as redundancy reduction goes, and there’s still a lot left, as you point out. But it’s *much* better than just throwing up the top-k phrases :)

The right way to do syntactic filtering for significant phrases is an interesting problem. Restricting to NPs and adjectives is definitely a possibility. I think certain PPs and verbs can be pretty interesting, though they’re sometimes mundane. Sometimes things that look like punctuation are informative … for example, when a “+” comes up, it’s usually because it’s being used in an “X + Y = Z” construction, e.g. “sunshine + park = happiness”. The Unicode music symbol ♫ is another common but interesting token.

We thought the first version of this should err on the side of keeping as much as possible. (wouldn’t it be nice to have a magical completely unsupervised analysis system with no biases implied by the implementor? :) ) Well, there is already one important filtering step. We take the raw n-grams then throw out ones that look like they cross syntactic boundaries — e.g. ones that contain a right-binding function word like “the” at the rightmost position. Results are much worse without this step…
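The filtering step described above (throw out n-grams whose rightmost word is a right-binding function word like “the”) can be sketched like this. Only “the” is named in the text; the rest of the `RIGHT_BINDING` word list and the helper names are hypothetical.

```python
# Hypothetical list of function words that bind to the word on their right;
# an n-gram ending in one of them probably stops mid-constituent.
RIGHT_BINDING = {"the", "a", "an", "of", "to", "in", "on", "by", "for", "with", "and", "my", "your"}


def crosses_boundary(ngram):
    """True if the n-gram's rightmost token is a right-binding function word."""
    return ngram[-1].lower() in RIGHT_BINDING


def candidate_ngrams(tokens, max_n=3):
    """All n-grams up to length max_n, minus those that look like they cross a boundary."""
    out = []
    for n in range(1, max_n + 1):
        for i in range(len(tokens) - n + 1):
            gram = tuple(tokens[i:i + n])
            if not crosses_boundary(gram):
                out.append(gram)
    return out


print(candidate_ngrams("by the black keys".split()))
```

This removes “by the” and a bare “the”, but “by the black” still passes, because its rightmost word is a content word. That matches the “by the black” complaint in the earlier comment and shows why a stricter filter (such as restricting to noun phrases) might still help.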

The system’s overall result quality is much better on non-news topics like “sandwich”. I’m not sure why — it might be because, on news topics, a small set of things are said in many different ways, and tweetmotif fails to abstract over them and ends up with too much redundancy.

Twitter conventions are really interesting. Hashtags are treated specially: they are only allowed to be unigrams and are never allowed to join anything else as an n-gram, since they usually have unordered, tag-like set semantics. (There are some instances where that doesn’t hold, e.g. “i went to the store and bought a #macintosh and bla…”, but they seem rare.) @-replies are treated specially too. Everything still boils down to textual n-grams, which is better than nothing but not quite right, of course. There’s very obvious structured information here.
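A minimal sketch of the hashtag rule: hashtags are emitted only as unigrams and act as boundaries, so no n-gram spans across them. The reply says @-replies are “treated specially” without saying how; handling them exactly like hashtags here is an assumption, as is the function name.

```python
def ngrams_with_hashtags(text, max_n=3):
    """Extract n-grams up to max_n, where #hashtags (and, as an assumption,
    @-mentions) are standalone unigrams that never join a longer n-gram."""
    grams = []
    run = []  # current run of ordinary words between special tokens

    def flush():
        # Emit every n-gram inside the current run of ordinary words, then reset it.
        for n in range(1, max_n + 1):
            for i in range(len(run) - n + 1):
                grams.append(tuple(run[i:i + n]))
        run.clear()

    for tok in text.split():
        if tok.startswith(("#", "@")):
            flush()              # a hashtag ends the current run
            grams.append((tok,))  # and is kept only as a unigram
        else:
            run.append(tok)
    flush()
    return grams


print(ngrams_with_hashtags("queries failed #wolframalpha google killer", max_n=2))
```

Because the hashtag splits the text into two runs, bigrams like “failed #wolframalpha” are never produced, while “queries failed” and “google killer” still are.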

Oh yeah, and on hashtags: I personally think their semantics are basically the same as a set-of-words view of tweets. (If you had good NLP, you shouldn’t need tags for textual data, right? Especially when tags count against the 140-character limit just as much as normal text!) Everyone else seems to think they’re important, though, and that they should be linkified in the UI to launch a new search and such.

Hashtags do have one very important aspect: people use them when they intentionally want their message to be grouped with other messages using that same hashtag. That alone makes them worthy of more in-depth special treatment.

Why did you take http://www.tweetmotif.com/ down?

I wanted to try it.

It was too annoying to maintain :)