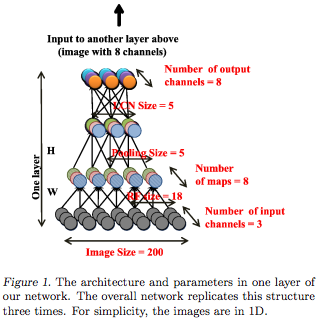

There was an interesting ICML paper this year about very large-scale training of deep belief networks (a.k.a. neural networks) for unsupervised concept extraction from images. They (Quoc V. Le and colleagues at Google/Stanford) have a cute example of learning very high-level features that are evoked by images of cats (from YouTube still-image training data); one is shown below.

For those of us who work on machine learning and text, the question always comes up, why not DBNs for language? Many shallow latent-space text models have been quite successful (LSI, LDA, HMM, LPCFG…); there is hope that some sort of “deeper” concepts could be learned. I think this is one of the most interesting areas for unsupervised language modeling right now.

But note it’s a bad idea to directly analogize results from image analysis to language analysis. The problems have radically different levels of conceptual abstraction baked-in. Consider the problem of detecting the concept of a cat; i.e. those animals that meow, can be our pets, etc. I hereby propose a system that can detect this concept in text, and compare it to the image analysis DBN as follows.

| Problem | Concept representation | Concept detector | Cost to create concept detector |
|---|---|---|---|
| Image analysis | | | 1,152,000 CPU-hours to train neural network; $61,056 at current GCE prices |
| Language analysis | cat a.k.a. | `"cat" in re.split('[^a-z]', text.lower())` | 147 CPU-microseconds to compile finite-state network /[^a-z]/; $0.000078 at GCE prices |

I mean: you can identify the concept “cat” by tokenizing a text, i.e. breaking it up into words, and looking for the word “cat”. To identify the “cat” concept from a vector of pixel intensities, you have to run through a cascade of filters, edge detectors, shape detectors and more. This paper creates the image analyzer with tons of unsupervised learning; in other approaches you still have to train all the components in your cascade. [Note.]
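The table's language-analysis detector can be written out as a runnable sketch. The function names `tokenize` and `mentions_cat` are made up here for illustration; the regex and the lowercasing come straight from the table.

```python
import re

def tokenize(text):
    # Lowercase the text, split on anything that is not a-z,
    # and drop the empty strings that re.split leaves behind.
    return [tok for tok in re.split('[^a-z]', text.lower()) if tok]

def mentions_cat(text):
    # The whole "concept detector": is the word "cat" among the tokens?
    return "cat" in tokenize(text)

print(mentions_cat("The cat sat on the mat."))   # True
print(mentions_cat("Concatenate the strings."))  # False
```

Matching on whole tokens rather than substrings is what keeps "concatenate" from counting as a cat. The plural "cats" would not match either, which is a morphology problem rather than a tokenization one.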

In text, the concept of “cat” is immediately available on the surface — there’s a whole word for it. Think of all the different shapes and types of cats which you could call a “cat” and be successfully understood. Words are already a massive dimension reduction of the space of human experiences. Pixel intensity vectors are not, and it’s a lot of work to reduce that dimensionality. Our vision systems are computational devices that do this dimension reduction, and they took many millions of years of evolution to construct.

In comparison, the point of language is communication, so it’s designed, at least a little bit, to be comprehensible; pixel intensity vectors do not seem to have such a design goal. ["Designed."] The fact that it’s easy to write a rule-based word extractor with /[^a-zA-Z0-9]/ doesn’t mean bag-of-words or n-grams are “low-level”; it just means that concept extraction is easy with text. In particular, English has whitespace conventions and simple enough morphology that you can write a tokenizer by hand, and we’ve designed character encoding standards that let computers unambiguously map between word forms and binary representations.
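To make the "rule-based word extractor" concrete, here is a minimal sketch of bag-of-words and n-gram extraction built on the /[^a-zA-Z0-9]/ split. The helper names (`tokenize`, `ngrams`, `extract_features`) and the choice to lowercase and stop at bigrams are assumptions for illustration, not anything prescribed above.

```python
import re
from collections import Counter

def tokenize(text):
    # Split on any character that is not a letter or digit; drop empties.
    return [tok for tok in re.split('[^a-zA-Z0-9]', text) if tok]

def ngrams(tokens, n):
    # All contiguous runs of n tokens, joined with spaces.
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

def extract_features(text, max_n=2):
    # Count every n-gram for n = 1..max_n over the lowercased tokens.
    tokens = [tok.lower() for tok in tokenize(text)]
    counts = Counter()
    for n in range(1, max_n + 1):
        counts.update(ngrams(tokens, n))
    return counts

features = extract_features("The cat chased the other cat.")
print(features["cat"], features["the cat"])  # 2 1
```

Every feature that comes out is already a recognizable word or phrase, with no training step anywhere.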

Unsupervised cross-lingual phonetic and morphological learning is closer, cognitive-level-of-abstraction-wise, to what the deep belief networks people are trying to do with images. To make a fairer table above, you might want to compare to the training time of an unsupervised word segmenter / cross-lingual lexicon learner.

[Another aside: The topic modeling community, in particular, seems to often mistakenly presume you need dimension reduction to do anything with text. Every time you run a topic model you're building off of your rule-based concept extractor -- your tokenizer -- which might very well be doing all the important work. Don't forget you can sometimes get great results with just the words (and phrases!), for both predictive and exploratory tasks. Getting topics can also be great, but it would be nice to have a better understanding exactly when or how they're useful.]

This isn’t to say that lexicalized models (be they document or sequence-level) aren’t overly simple or crude. Just within lexical semantics, it’s easy to come up with examples of concepts that “cat” might refer to, but you want other words as well. You could have synonyms {cat, feline}, or refinements {cat, housecat, tabby} or generalizations {cat, mammal, animal} or things that seem related somehow but get tricky the more you think about it {cat, tiger, lion}. Or maybe the word “cat” is a part of a broad topical constellation of words {cat, pets, yard, home} or with an affective aspect twist {cat, predator, cruel, hunter} or maybe a pretty specific narrative frame {cat, tree, rescue, fireman}. (I love how ridiculous this last example is, but we all instantly recognize the scenario it evokes. Is this an America-specific cultural thing?)
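A toy sketch of why the bare symbol is not enough: every wordset above contains "cat", so the word alone cannot say which concept a text evokes, and you have to look at the other words. The set labels and the overlap-count scoring are hypothetical choices made for illustration only.

```python
import re

# The wordsets from the paragraph above; the dictionary keys are made-up labels.
WORDSETS = {
    "synonyms": {"cat", "feline"},
    "refinements": {"cat", "housecat", "tabby"},
    "generalizations": {"cat", "mammal", "animal"},
    "related": {"cat", "tiger", "lion"},
    "topical": {"cat", "pets", "yard", "home"},
    "affective": {"cat", "predator", "cruel", "hunter"},
    "narrative": {"cat", "tree", "rescue", "fireman"},
}

def evoked(text):
    # Tokenize as before, then count how many words besides "cat"
    # each wordset shares with the text.
    tokens = {tok for tok in re.split('[^a-z]', text.lower()) if tok}
    return {
        label: len((words - {"cat"}) & tokens)
        for label, words in WORDSETS.items()
    }

scores = evoked("The fireman climbed the tree to rescue the cat.")
print(scores["narrative"], scores["topical"])  # 3 0
```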

If you want to represent and differentiate between the concepts evoked by these wordsets, then yes, the bare symbol “cat” is too narrow (or too broad), and maybe we want something “deeper”. So what does “deep learning” mean? There’s a mathematical definition in the largeness of the class of functions these models can learn; but practically when you’re running these things, you need a criterion for how good the concepts you’re learning are, which I think the rhetoric of “deep learning” is implicitly appealing to.

In the images case, “deep” seems to mean “recognizable concepts that look cool”. (There’s room to be cynical about this, but I think it’s fine when you’re comparing to things that are not recognizable.) In the text case, if you let yourself use word-and-ngram extraction, then you’ve already started with recognizable concepts — where are you going next? (And how do you evaluate?) One interesting answer is, let’s depart lexical semantics and go compositional; but perhaps there are many possibilities.

Note on table: Timing of regex compilation was via IPython `%timeit re.purge();re.compile('[^a-z]')`. Also I’m excluding human costs: hundreds (thousands?) of hours from 8 CS researcher coauthors (and imagine how much Ng and Dean cost in dollars!), versus whatever skill level it is to write a regex. The former costs are justified given it is, after all, research; the regex works well because someone invented all the regular expression finite-state algorithms we now take for granted. But there are awfully good reasons there was so much finite-state research decades ago: they’re really, really useful for processing symbolic systems created by humans; most obviously artificial programming languages designed that way, but also less strict quasi-languages like telephone number formats, and certain natural language analysis tasks like tokenization and morphology…
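Outside IPython, roughly the same measurement can be made with the standard `timeit` module. This is only a sketch: the function name `time_regex_compile` and the repeat count are arbitrary choices here, and the number you get depends on your machine and Python version, so don't expect exactly 147 microseconds.

```python
import re
import timeit

def time_regex_compile(pattern='[^a-z]', repeats=1000):
    # Clear re's internal cache before each compile so every call
    # pays the full compilation cost, as in the %timeit one-liner.
    def compile_fresh():
        re.purge()
        re.compile(pattern)

    total_seconds = timeit.timeit(compile_fresh, number=repeats)
    return total_seconds / repeats

seconds = time_regex_compile()
print(f"{seconds * 1e6:.1f} microseconds per compile")
```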

“Design:” …where we can define “design” and “intention” in a Herbert Simon sort of way to mean “part of the optimization objective of either cultural or biological evolution”; i.e. aspects of language that don’t have good communicative utility might go away over time, but the processes that give us light patterns hitting our retina are quite exogenous, modulo debates about God or anthropic principles etc.

The space of words is absurdly high dimensional. This paper deals with a mere 120,000 dimensions (200×200 pixel RGB images). The number of words in English isn’t really well defined but it’s certainly more than 120k. And that’s just to represent one word! The space of sentences is exponentially larger.

Words aren’t dimensionality reduction, they’re just a particularly interpretable “basis” (really a frame but that’s not important).

I can also recognize the frame in {cat, tree, rescue, fireman} immediately; I guess such things are pretty common now. I agree that directly drawing an analogy between CV and NLP might be dangerous; but you are drawing your own analogy (characters as pixels, etc.), right?

Just got a chance to read your blog. A lot of interesting stuff :) so I subscribed to your blog in my Google Reader, under the category of ML, not Stat though (Andrew Gelman is there). If you resist, I could possibly change it XD