Update (3/14/2010): There is now a TweetMotif paper.

Last week, I, with my awesome friends

David Ahn and Mike Krieger, finished hacking together an experimental prototype,

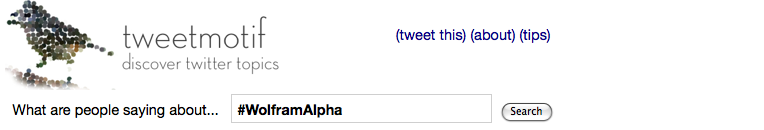

TweetMotif, for exploratory search on Twitter. If you want to know what people are thinking about something, the normal search interface

search.twitter.com gives really cool information, but it’s hard to wade through hundreds or thousands of results. We take tweets matching a query and group together similar messages, showing significant terms and phrases that co-occur with the user query. Try it out at

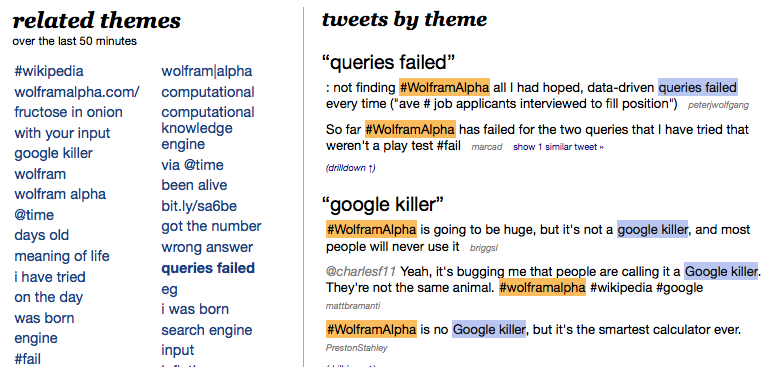

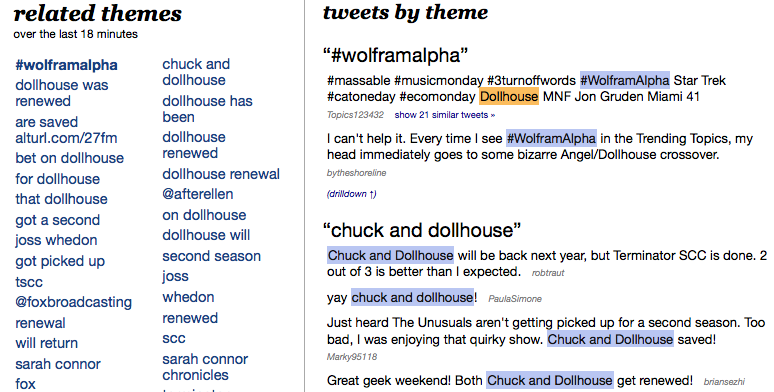

tweetmotif.com. Here’s an example for a current hot topic,

#WolframAlpha:

It’s currently showing tweets that match #WolframAlpha as well as two interesting bigrams: “queries failed” and “google killer”. TweetMotif doesn’t attempt to derive the meaning of the phrases or the sentiment toward them (NLP is hard, and doing this much is hard enough!), but it’s easy for you to look at the tweets themselves and figure out what’s going on.
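To make the idea of “significant bigrams that co-occur with the query” a bit more concrete, here is a rough sketch. It is not TweetMotif’s actual algorithm; it just assumes one simple approach, which is to rank bigrams by how much more often they show up in the matching tweets than in some background sample of tweets. The function names, the smoothing, and the `min_count` cutoff are all made up for illustration.

```python
import re
from collections import Counter


def tokenize(text):
    # Lowercase and keep hashtags/mentions as single tokens (an assumption).
    return re.findall(r"[#@]?\w+", text.lower())


def bigrams(tokens):
    # Adjacent token pairs, e.g. ["a", "b", "c"] -> [("a", "b"), ("b", "c")].
    return list(zip(tokens, tokens[1:]))


def significant_bigrams(matching, background, top_n=5, min_count=2):
    """Rank bigrams by (rate in matching tweets) / (smoothed background rate).

    Hypothetical scoring, for illustration only.
    """
    # Count each bigram at most once per tweet (document frequency).
    fg = Counter(g for t in matching for g in set(bigrams(tokenize(t))))
    bg = Counter(g for t in background for g in set(bigrams(tokenize(t))))
    n_fg, n_bg = len(matching), len(background)

    scored = []
    for gram, count in fg.items():
        if count < min_count:
            continue  # ignore bigrams seen in too few matching tweets
        fg_rate = count / n_fg
        bg_rate = (bg[gram] + 1) / (n_bg + 1)  # add-one smoothing
        scored.append((fg_rate / bg_rate, " ".join(gram)))

    # Highest score first; ties broken alphabetically for stable output.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [phrase for _, phrase in scored[:top_n]]
```

Calling `significant_bigrams(matching_tweets, background_tweets)` returns a short list of phrases to show next to the query. A real system would want a better significance test and a much larger background sample.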

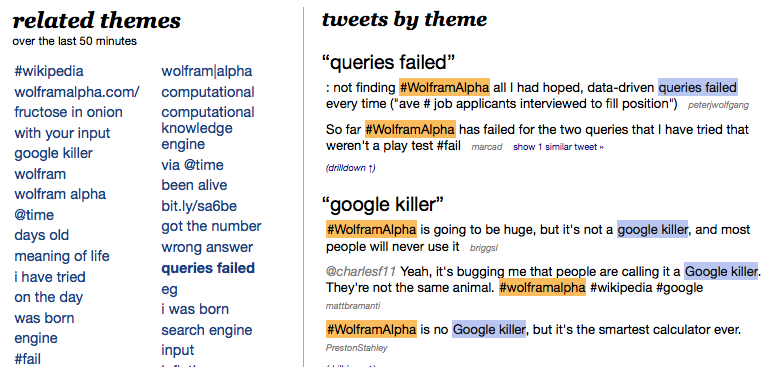

Here’s another fun example right now, a query for Dollhouse:

I love that the #wolframalpha topic has “infected” the dollhouse space. Someone pointed out a connection between them, but really they’re connected through bot spam. TweetMotif’s duplicate detection algorithm found 22 messages here where each is basically a list of all the trending topics. This seems to be a popular kind of Twitter spambot.
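For a feel of how near-duplicate messages like these can be grouped, here is a minimal sketch. Again, this is not the algorithm TweetMotif uses; it assumes a generic technique, Jaccard similarity over token sets with a greedy single pass, and the `threshold` value and function names are hypothetical.

```python
import re


def token_set(text):
    # Lowercased set of word tokens; hashtags/mentions kept whole (an assumption).
    return set(re.findall(r"[#@]?\w+", text.lower()))


def jaccard(a, b):
    # |A intersect B| / |A union B|; two empty sets are treated as identical.
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def group_near_duplicates(tweets, threshold=0.7):
    """Greedily assign each tweet to the first group whose representative
    (the group's first tweet) is at least `threshold` similar."""
    groups = []  # list of (representative token set, member tweets)
    for tweet in tweets:
        tokens = token_set(tweet)
        for representative, members in groups:
            if jaccard(tokens, representative) >= threshold:
                members.append(tweet)
                break
        else:
            # No similar group found, so this tweet starts a new one.
            groups.append((tokens, [tweet]))
    return [members for _, members in groups]
```

Spam tweets that are all reorderings of the same trending-topic list end up in one big group, while ordinary tweets mostly stay in groups of one. The pairwise comparison is quadratic in the worst case, which is fine for a few hundred search results but would need something like shingling plus hashing at larger scale.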

I learned a ton making this system, and I’ll try to write more about the technical details in a future post. It’s interesting to hear people speculate on how it works; everyone gives a different answer. I guess this goes to show you that search/NLP is still a pretty unsettled, not-completely-understood area.

There are lots of interesting TweetMotif examples. More prosaic, less news-y queries like sandwich yield cool things like major ingredients of sandwiches and types of sandwiches. (These are basically distributional similarity candidates for synonym and meronym acquisition, though a bit too noisy to use in their current form.) And in a few cases, like for understanding currently unfolding events, TweetMotif might even be useful! It would be nice to expand the set of queries it serves usefully. We’re occasionally posting interesting queries at twitter.com/tweetmotif.

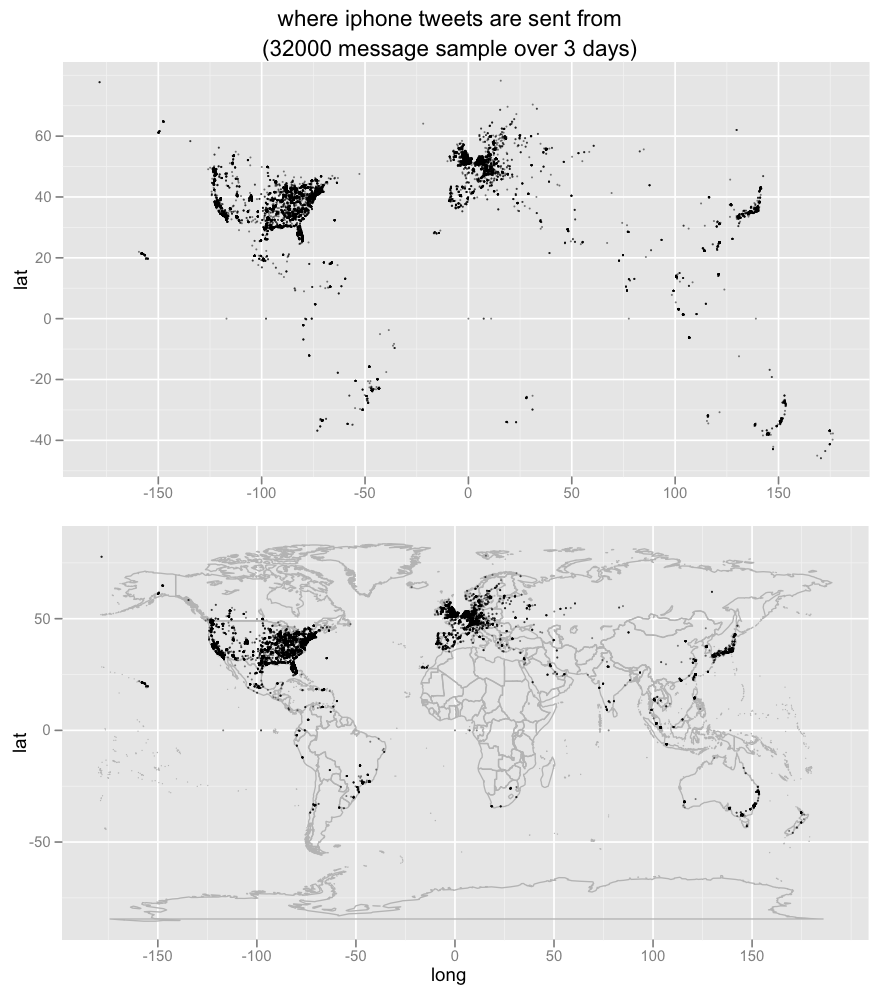

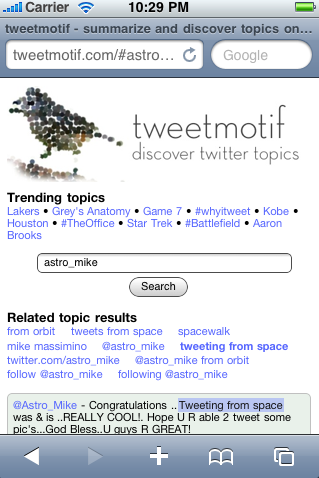

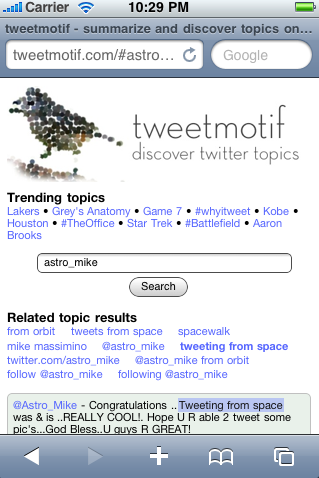

And oh yeah. We have a beautiful iPhone interface!

Check it out, folks. This is a functional prototype, so you can play with it right now at tweetmotif.com.